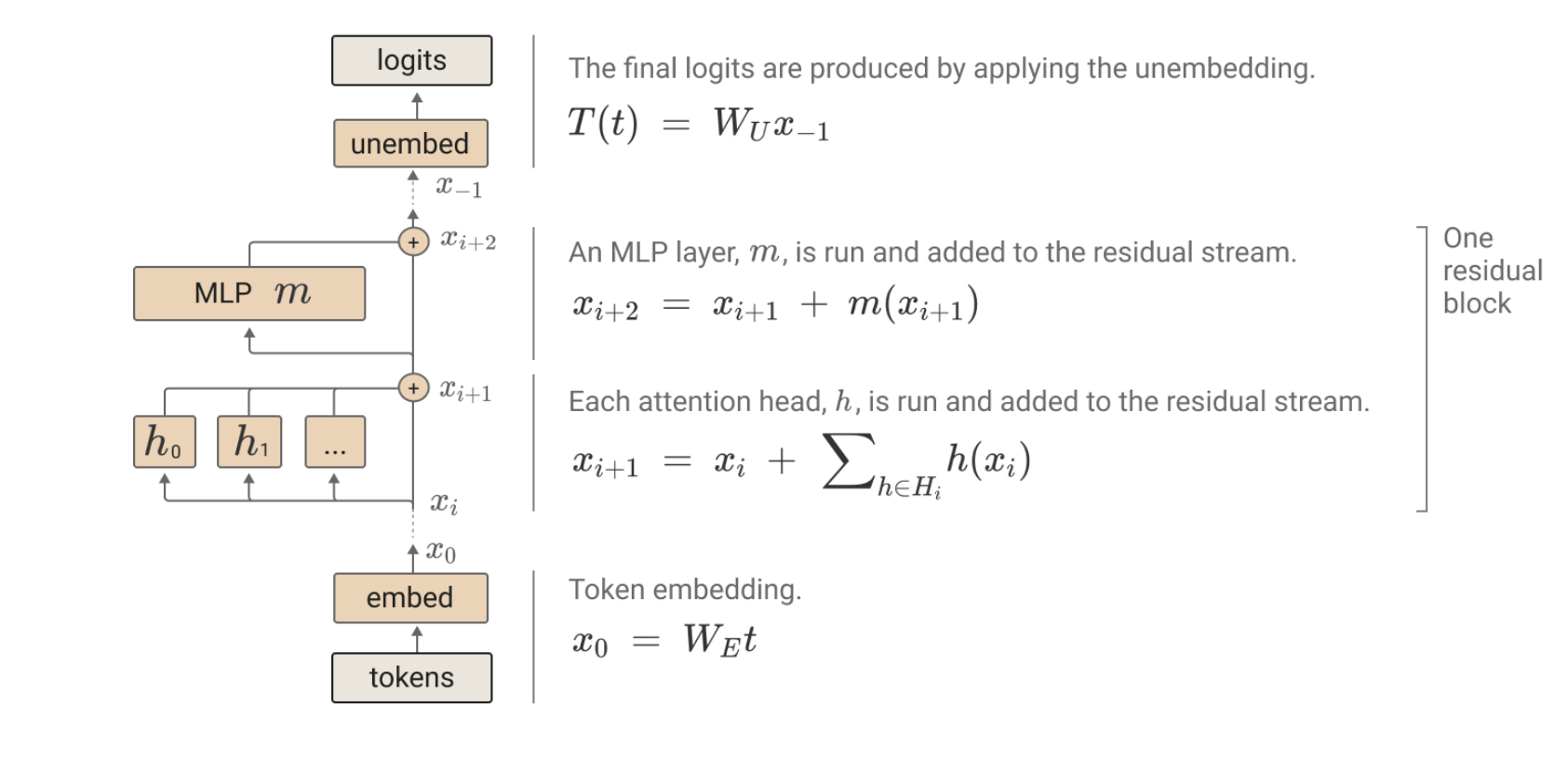

A Mathematical Framework for Transformer Circuits

Notes: the models studied are simplified, with no MLP layers, no biases, and no layer norm.

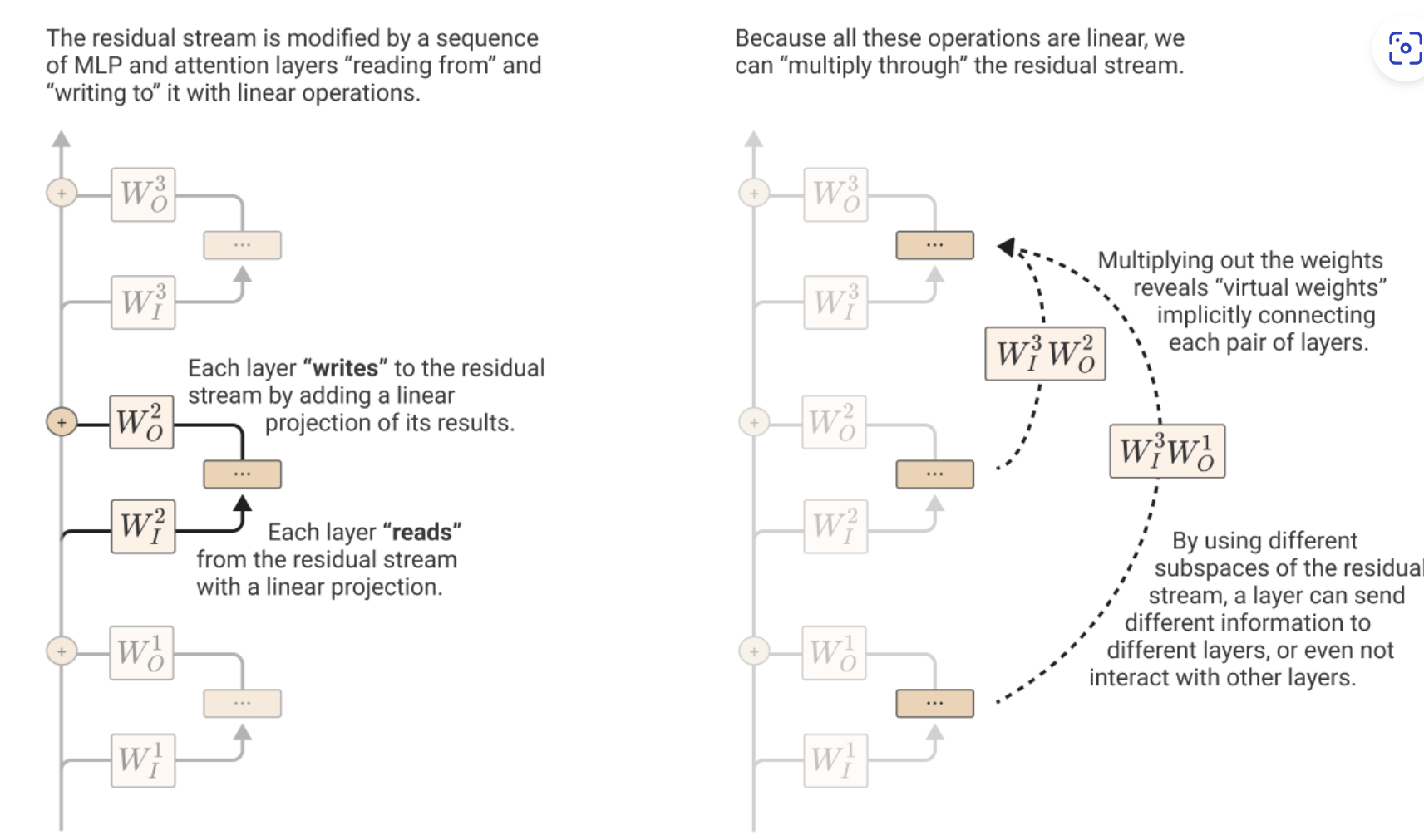

"attention and MLP layers each “read” their input from the residual stream (by performing a linear projection), and then “write” their result to the residual stream by adding a linear projection back in."
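A minimal sketch of one attention head reading from and writing to the residual stream, in plain Python so it needs no libraries. It follows the simplifications above (no bias, no layer norm). The weight names `W_Q`, `W_K`, `W_V`, `W_O`, the causal mask, and the `1/sqrt(d_head)` scaling are assumptions borrowed from the standard transformer, and the helper names are hypothetical.

```python
import math

def matvec(W, x):
    """Multiply a matrix W (list of rows) by a vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_head(resid, W_Q, W_K, W_V, W_O):
    """One causal attention head with no bias and no layer norm.

    resid holds one residual-stream vector (length d_model) per position.
    Returns one output vector (length d_model) per position; the caller
    adds these back into the stream.
    """
    # Read: linear projections of the residual stream down to d_head.
    q = [matvec(W_Q, x) for x in resid]
    k = [matvec(W_K, x) for x in resid]
    v = [matvec(W_V, x) for x in resid]
    d_head = len(q[0])
    out = []
    for i in range(len(resid)):
        # Causal mask: position i attends only to positions 0..i.
        scores = [sum(a * b for a, b in zip(q[i], k[j])) / math.sqrt(d_head)
                  for j in range(i + 1)]
        weights = softmax(scores)
        mixed = [sum(w * v[j][d] for j, w in enumerate(weights))
                 for d in range(d_head)]
        # Write: project the head's result back up to d_model.
        out.append(matvec(W_O, mixed))
    return out

def add_to_stream(resid, layer_out):
    """Writing to the residual stream is plain element-wise addition."""
    return [[a + b for a, b in zip(x, y)] for x, y in zip(resid, layer_out)]

# Example: d_model = 3, d_head = 2, two positions (weights are arbitrary).
W_Q = W_K = W_V = [[1, 0, 0], [0, 1, 0]]  # d_head x d_model
W_O = [[1, 0], [0, 1], [0, 0]]            # d_model x d_head
resid = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
resid = add_to_stream(resid, attention_head(resid, W_Q, W_K, W_V, W_O))
print(resid[0])  # → [2.0, 0.0, 0.0]
```

The softmax is the only nonlinearity here, and it stays inside the head; the stream itself only ever sees the final addition.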

Residual stream: the sum of the outputs of all previous layers plus the original token embedding.
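A small check of this definition for a single token position, in plain Python. Each layer here is a hypothetical `(W_in, W_out)` pair that reads with `W_in`, applies `tanh` as a stand-in for whatever the layer computes internally, and writes with `W_out`; the weights are arbitrary illustration values.

```python
import math

def matvec(W, x):
    """Multiply a matrix W (list of rows) by a vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def run_residual(embedding, layers):
    """Run a stack of layers on one token's residual stream.

    Each layer is a hypothetical (W_in, W_out) pair: it reads the stream
    with W_in, applies tanh (a stand-in for the layer's internal
    computation), and writes its result back with W_out. Returns the final
    stream and the list of per-layer outputs.
    """
    x = list(embedding)
    outputs = []
    for W_in, W_out in layers:
        h = [math.tanh(v) for v in matvec(W_in, x)]  # read, then compute
        y = matvec(W_out, h)                          # project back to d_model
        outputs.append(y)
        x = [a + b for a, b in zip(x, y)]             # write by adding
    return x, outputs

# Example: d_model = 3, two layers with hidden width 2.
embedding = [0.5, -1.0, 2.0]
layers = [
    ([[1, 0, 0], [0, 1, 0]], [[1, 0], [0, 1], [1, 1]]),
    ([[0, 0, 1], [1, 1, 1]], [[0.5, 0], [0, 0.5], [0, 0]]),
]
final, outputs = run_residual(embedding, layers)

# The final stream equals the embedding plus the sum of every layer's output.
reconstructed = [e + sum(y[d] for y in outputs) for d, e in enumerate(embedding)]
assert all(abs(a - b) < 1e-12 for a, b in zip(final, reconstructed))
```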

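The residual stream itself is only modified linearly: layers read from it through linear projections and write to it by addition. Nonlinearities (such as the attention softmax) happen inside layers, never on the stream directly.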
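Because the stream is a running sum and every read is a linear map, what a later layer reads splits term by term into contributions from the embedding and from each earlier layer. A short derivation, writing $x_0$ for the token embedding, $y_j$ for the output of layer $j$, $x_l$ for the stream after $l$ layers, and $W$ for any linear read (these symbols are this note's own, not necessarily the paper's):

```latex
x_l = x_0 + \sum_{j=1}^{l} y_j
\quad\Longrightarrow\quad
W x_l = W x_0 + \sum_{j=1}^{l} W y_j
```

So each earlier layer's contribution to a later layer's input can be examined separately; nonlinearity only enters once the read values are processed inside a layer.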